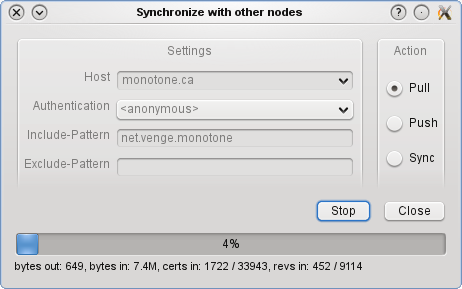

I’ve recently packaged monotone-viz 1.0.2 for MacPorts (and soon also for openSUSE). It is a program that displays monotone’s DAG of revisions and their properties. This comes in very handy if you need to do a complex (asynchronous) merge or want to know what exactly monotone has merged together for you. One example, shown on the right, is the graph of the “merge fest” we had in spring 2008 for the last summit.

(Source: monotone website)

Merging in monotone is actually quite robust; while I’ve had a lot of “fuzzy” feelings in the past when doing complex merges with subversion or even CVS, merging in monotone is a no-brainer. Most of the time it does exactly what you want it to do. One exception, however, is the handling of deleted files, also known as “die-die-die” merge fallout: if you merge two distinct development lines where a file has been edited on the left side and deleted on the right side, the deletion always wins over the edit, and there is absolutely nothing you can do about it (well, apart from re-adding the file after the merge and losing the file’s previous history). Thankfully this is not such a common use case, and keeping an “Attic” directory, where deleted but possibly revivable files reside, is the medium-term solution until someone picks up the topic again.
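To make the deletion rule concrete, here is a toy Python sketch of a per-file three-way merge in which a delete always wins. It only illustrates the behaviour described above and is not monotone’s actual merge code; `merge_file` and the `DELETED` marker are names made up for this example.

```python
# Toy model of "die-die-die" semantics for a single file.
# Hypothetical names; this is NOT how monotone is implemented internally.

DELETED = None  # marker meaning "the file was deleted on this side"


def merge_file(ancestor, left, right):
    """Three-way merge of one file's content where a deletion always wins."""
    # Once a file is dead on either side, it stays dead in the merge result,
    # even if the other side edited it in the meantime.
    if left is DELETED or right is DELETED:
        return DELETED
    if left == right:
        return left
    if left == ancestor:
        return right  # only the right side changed the file
    if right == ancestor:
        return left   # only the left side changed the file
    # Both sides edited differently; a real tool would run a content merger.
    raise ValueError("conflicting edits")


# Edited on the left, deleted on the right: the edit is silently dropped.
result = merge_file("v1", "v1 plus my edit", DELETED)
```

In this model `result` ends up as `DELETED`, which is exactly the surprising case: the left side’s edit never shows up as a conflict, it just disappears together with the file.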

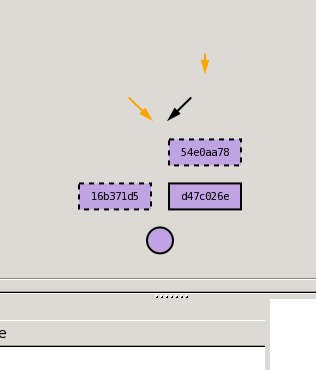

But back to monotone-viz, I couldn’t fix one problem with monotone-viz on MacPorts: It doesn’t properly draw the arrows on the graph, but rather puts them above the revisions, like this:

I’ve already asked the author about it, but he couldn’t find out what’s wrong, so I suspect something is wrong with my gtk+ setup. If you have a hint for me on where to look, give me a pointer; I’d be very thankful. And if it works correctly for you, even better, drop me a note as well. I’ve uploaded a test monotone database with a simple merge to test the behaviour. Thanks!

[Update: As this bug points out, the rendering problem comes from Graphviz’ dot program. Hopefully the patch will make it into a new release shortly.]