I’ve been meaning to write about this for a very long time, but never got around to it, mostly because of time constraints. But here we are, so let’s go.

What is Magnet?

Magnet is a Java library that allows you to apply dependency injection (DI), more specifically Dependency Inversion, in your Java / Kotlin application.

Why another DI library?

There have been, and still are, many libraries that do this job. On Android, where I mostly work, it all started with RoboGuice (an Android-friendly version of Google’s Guice); then people migrated to Square’s Dagger, and later Google picked it up again and created Dagger2, which is still in wide use in countless applications.

I have my own share of experience with Dagger2 from the past; the initial learning curve was steep, but once you were into it enough it worked out pretty well, except for a few nuisances:

- Complexity – The amount of generated code is hard to grasp, and so are the reasons why code generation sometimes fails because of an error on your side. While literally all code is generated for you, navigating between the generated parts proved to be very hard. And understanding some of the errors the Dagger2 compiler spits out when you miss an annotation somewhere is, to put it mildly, not easy either.

- Boilerplate – Dagger2 differentiates between Modules, Components, Subcomponents, Component relations, Providers, Custom Factories and whatnot, and comes with a variety of annotations that control the behavior of all these things. This not only adds complexity; because of the nature of the library you also have to do a lot of legwork before your first injection is available somewhere. Have you ever asked yourself, for example, whether there is a real need to have both components and modules in Dagger2?

Now this criticism is not new at all, and with the advent of Kotlin on Android other projects emerged that try to provide an alternative to Dagger2, most prominently Kodein and Koin. However, when I played around with those, it felt like they were missing something:

- In Kodein I disliked that I had to pass a kind of god object around to get my dependencies in place. Their solution felt more like a service locator implementation than a DI solution, as I was unable to have clean constructors without DI-specific parameters, like I was used to from Dagger2 and others.

- In Koin I disliked that I had to basically wire all my dependencies together by hand; clearly this is the task that the DI library should do for me!

Looking for alternatives I stumbled upon Magnet. And I immediately fell in love with it.

Magnet Quick-Start

To get up to speed, let’s compare Magnet with Dagger2 by looking at the specific terms and concepts both libraries use.

| Dagger2 | Magnet |
| --- | --- |
| Component | Scope |
| Subcomponent | – |
| Module | – |
| @Inject | @Instance on class level |
| @Provides | @Instance on class level or provide method |
| @Binds | @Instance on class level or provide method |
| @Component | – |
| @Singleton | @Instance with scoping = TOPMOST |
| @Named("...") | @Instance / bind() with classifier = "..." |
| dagger.Lazy<Foo> | Kotlin Lazy<Foo> |
| dagger.Provider<Foo> | @Instance with scoping = UNSCOPED |
| Dagger Android | Custom implementation needed, like this |

Don’t be afraid, we’ll discuss everything above in detail.

Initial Setup

Magnet has a compiler and a runtime that you need to add as dependencies to each application module you’d like to use Magnet with:

dependencies {

implementation "de.halfbit:magnet-kotlin:3.3-rc7"

kapt "de.halfbit:magnet-processor:3.3-rc7"

}

The magnet-kotlin artifact pulls in the main magnet dependency transitively and adds a couple of useful Kotlin extension functions and helpers on top of it. The annotation processor magnet-processor generates the glue code to be able to construct your object tree later on. Besides that there are other artifacts available, which we’ll come back to later on.

Now that the dependencies are in place, Magnet needs an assembly point in your application to work. This tells Magnet where it should create its index of injectable things in your application, basically a flat list of all features that the app pulls in through its Gradle dependencies section. The assembly point can be written as an interface that you annotate with Magnet’s @Registry annotation:

import magnet.Registry

@Registry

interface Assembly

The main application module usually contains this marker interface, but it could just as well be the main / entry module of your library.

About scopes

Scopes in Magnet act similarly to Components in Dagger2. They can be seen as “bags of dependencies” that hold references to objects that have previously been provisioned. Scopes can contain child scopes, which in turn can contain child scopes themselves.

There is no limit to how deeply you can nest your scopes; in Android application development, however, you should usually have at least one scope per screen in addition to the root scope, which we’ll discuss in a second.

Scopes are very easy and very cheap to create, so it can also be useful to create additional scopes for certain time-limited tasks, like a separate scope for a background service or even a scope for a specific process-intensive functionality that requires the instantiation of several classes that are not needed outside of this task. This way the memory used by these classes can quickly be reclaimed, because the particular scope and all the instances it references become eligible for garbage collection shortly after the task has finished.
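To make the “bag of dependencies” picture a bit more tangible, here is a minimal sketch of a scope tree in plain Kotlin. It is a toy model and not how Magnet is implemented; ToyScope, put and find are names I made up for illustration.

```kotlin
// Toy model of a scope tree. ToyScope, put and find are hypothetical names,
// they are not part of Magnet's API.
class ToyScope(val parent: ToyScope? = null) {
    private val instances = mutableMapOf<Class<*>, Any>()

    // Create a child scope that can see everything this scope holds.
    fun createSubscope(): ToyScope = ToyScope(parent = this)

    // Put an existing instance into this scope's "bag".
    fun <T : Any> put(type: Class<T>, instance: T) {
        instances[type] = instance
    }

    // Look in this scope first, then walk up through the parents.
    fun <T : Any> find(type: Class<T>): T? {
        val local = instances[type]
        return if (local != null) type.cast(local) else parent?.find(type)
    }
}
```

A lookup that starts in a child scope falls back to its parents, while a parent never sees what lives in its children. Once nothing references the child any more, everything stored in it can be garbage collected.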

Creating the Root Scope

With the assembly point in place, we can start and actually create what Magnet calls the Root Scope. This – as the name suggests – is the root of any other scope that you might create. In this way it is comparable with what you’d usually call the application component in Dagger2, so you should create it in your application’s main class (your Application subclass in Android, for example) and keep a reference to it there.

We do this as well, but at the same time add a little abstraction to make it easier to retrieve a reference to this (and possibly other) scopes later on:

// ScopeOwner.kt

interface ScopeOwner {

val scope: Scope

}

val Context.scope: Scope

get() {

val owner = this as? ScopeOwner ?:

error("$this is expected to implement ScopeOwner")

return owner.scope

}

// MyApplication.kt

class MyApplication : Application(), ScopeOwner {

override val scope: Scope by lazy {

Magnet.createRootScope().apply {

bind(this@MyApplication as Application)

bind(this@MyApplication as Context)

}

}

}

You see that the root scope is created lazily, i.e. on first usage. While Magnet scopes aren’t as heavy to create as Dagger2 components, it’s still a good pattern to do it this way.

In addition you see that – right after the root scope is created – we bind two instances into it, the application context and the application. The bind(T) method comes from magnet-kotlin and actually simply calls into a method whose signature is bind(Class<T>, T).
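The step from bind(Class<T>, T) to bind(T) can be sketched with a reified extension function. This is my guess at the general shape, not a copy of what magnet-kotlin ships; RecordingScope is a hypothetical stand-in so that the snippet compiles on its own.

```kotlin
// Hypothetical stand-in for a scope that only offers the Class-based call.
class RecordingScope {
    val bound = mutableMapOf<Class<*>, Any>()

    fun <T : Any> bind(type: Class<T>, instance: T): RecordingScope {
        bound[type] = instance
        return this
    }
}

// Reified extension: the type argument is taken from the static type at the
// call site, so the caller does not have to pass a Class object.
inline fun <reified T : Any> RecordingScope.bind(instance: T): RecordingScope =
    bind(T::class.java, instance)
```

With a helper shaped like this, the static type of the argument decides under which type the instance is registered. That would explain the explicit casts to Application and Context in the example above: the same object is bound twice, once per type.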

Creating subscopes

Once the root scope is available, you’re free to create additional sub-scopes for different purposes. This is done by calling scope.createSubscope(). A naive implementation of an “activity scope” could for example look like this:

abstract class BaseMagnetActivity : AppCompatActivity(), ScopeOwner {

override val scope: Scope by lazy {

application.scope.createSubscope()

}

}

But unfortunately this wouldn’t get us very far, since this scope would be created and destroyed every time the underlying Activity is restarted (e.g. on rotation). With AndroidX’s ViewModel library, however, we can create a scope that is not attached to the fragile Activity (or Fragment), but to a separate ViewModel that is kept around and only destroyed when the user finishes the component or navigates away from it. While the glue code to set up such a thing is not yet part of Magnet, it takes no great wizardry to write it yourself. You might want to take some inspiration from my own solution.

Provisioning of instances

Above we’ve seen how we can bind existing instances of objects into a particular scope, but of course a DI library should also allow you to create new instances of classes automatically, without you having to care about the details of the actual creation, like required parameters.

Magnet does this of course, and in addition introduces a novel approach to where it places the resulting instances in your object graph: auto-scoping.

Auto-scoping means that Magnet is smart about figuring out what dependencies your new instance needs and in which scopes these instances themselves are placed. It then determines the top-most location in your scope tree your new instance can go to and places it exactly there. If the top-most location then happens to be the root scope, the instance becomes available globally. This mimics the behavior of Dagger2 when you annotate a type with @Singleton:

@Instance(type = Foo::class, scoping = Scoping.TOPMOST)

class Foo {}

It’s important to understand that with Magnet you only ever annotate types (and possibly pure functions, see below), but never constructors. This is a major gotcha when coming from Dagger2, where you place the @Inject annotation directly on the constructor that Dagger2 should use to create the instance of your type. This also means that Magnet is a bit picky and requires your type to have exactly one visible constructor (package-private or `internal` is possible as well); otherwise you’ll receive an error.

The option Scoping.TOPMOST that you see in the example above, which triggers auto-scoping, is the default, so it can be omitted. Besides TOPMOST there are also DIRECT and UNSCOPED, which – as their names suggest – override the auto-scoping by placing an instance directly in the scope from which it was requested (DIRECT) or not in any scope at all (UNSCOPED). The latter is very useful for implementing the Factory pattern and can be compared with Dagger2’s Provider<Foo> feature.

Now, while this auto-scoping mechanism sounds awesome at first, there might be times when you want a little more control over what is going on. For example, you might have a class that does not directly depend on anything in your current scope, but you still want it to live in a specific (or “at-most-top-most”) scope, because it is not useful globally and would just take up heap space if kept around. This can be achieved as well, simply by tagging a scope with a limit and applying the same tag to the provisioning you want to limit:

const val ACTIVITY_SCOPE = "activity-scope"

val scope = ...

scope.limit(ACTIVITY_SCOPE)

@Instance(type = Foo::class, scoping = Scoping.TOPMOST, limitedTo = ACTIVITY_SCOPE)

class Foo {}

With all that information, let’s discuss a few specific examples. Consider a scope setup consisting of the root scope, the sub-scope “A” (tagged with SCOPE_A), which is a child of the root scope, and the sub-scope “B”, which is a child of the sub-scope “A”. Where do specific instances go?

- New instance without dependencies and scoping = TOPMOST – Your new instance goes directly into the root scope.

- New instance with dependencies that themselves all live in the root scope and scoping = TOPMOST – Your new instance goes directly into the root scope.

- New instance with at least one dependency that lives in sub-scope “A” and scoping = TOPMOST
    - If requested from the root scope, Magnet will throw an error at runtime, because the specific dependency is not available in the root scope
    - If requested from the sub-scope “A”, the new instance will go into the same scope
    - If requested from the sub-scope “B”, the new instance will go into sub-scope “A”, the “top-most” scope that this instance can be in

- New instance without dependencies, scoping = TOPMOST and limitedTo = SCOPE_A
    - If requested from the root scope, Magnet will throw an error at runtime, because the root scope is not tagged at all and there is no other parent scope available in which Magnet could look for a match for the limit configuration
    - If requested from the sub-scope “A”, the new instance will go into the same scope
    - If requested from the sub-scope “B”, the new instance will go into sub-scope “A”, the “top-most” scope that this instance is allowed to be in because of its limit configuration

- New instance with arbitrary dependencies and scoping = DIRECT – Your instance goes directly into the scope from which you requested it

- New instance with arbitrary dependencies and scoping = UNSCOPED – Your instance is just created and does not become part of any scope
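The cases above can be replayed with a small toy model. This is only a sketch of the rules as I described them, not Magnet’s actual algorithm; ChainScope, ToyScoping and placeInstance are hypothetical names, and the rule for combining dependencies with a limit is my own simplification.

```kotlin
// Toy model of the placement rules; every name here is hypothetical.
enum class ToyScoping { TOPMOST, DIRECT, UNSCOPED }

class ChainScope(
    val name: String,
    val parent: ChainScope? = null,
    val limit: String? = null
) {
    // The root has depth 0, each nesting level adds one.
    val depth: Int = (parent?.depth ?: -1) + 1
}

// Returns the scope that ends up holding the new instance,
// or null when the instance is not stored in any scope.
fun placeInstance(
    requestedFrom: ChainScope,
    scoping: ToyScoping,
    dependencyScopes: List<ChainScope> = emptyList(),
    limitedTo: String? = null
): ChainScope? {
    if (scoping == ToyScoping.UNSCOPED) return null
    if (scoping == ToyScoping.DIRECT) return requestedFrom

    // Scopes visible from the request: the requesting scope up to the root.
    val visible = generateSequence(requestedFrom) { it.parent }.toList()
    require(dependencyScopes.all { it in visible }) {
        "A dependency is not reachable from scope ${requestedFrom.name}"
    }
    // Nearest visible scope carrying the limit tag, if a limit was asked for.
    val limitScope = limitedTo?.let { tag ->
        visible.firstOrNull { it.limit == tag }
            ?: error("No scope limited to $tag is visible from ${requestedFrom.name}")
    }
    // Assumption of this sketch: the deepest constraint wins; without any
    // constraint the instance goes to the root.
    val constraints = dependencyScopes + listOfNotNull(limitScope)
    return constraints.maxByOrNull { it.depth } ?: visible.last()
}
```

Building root, “A” (with limit SCOPE_A) and “B” as a chain and calling placeInstance from different scopes gives the same answers as the list: an instance requested from “B” with a dependency in “A” lands in “A”, and the same request made from the root fails.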

Provisioning of external types

Imagine you have some external dependency in your application that contains a class one of your own classes depends on. In Dagger2 you have to write a custom provisioning method to make this type “known” to the DI. In Magnet the process is similar: like Dagger2, it does not use reflection to instantiate types, so you have to write such provisioning methods as well.

But in case the library you’re integrating was itself built with Magnet, then Magnet already created something that it calls a “provisioning factory” and that was likely packaged within the library already. In this case, Magnet will find that packaged provisioning factory and you don’t need to write custom provision methods yourself!

So, how exactly are these provisioning methods written? Well, it turns out Magnet’s @Instance annotation is not only allowed on types, but on pure (static, top-level) functions as well:

@Instance(type = Foo::class)

fun provideFoo(factory: Factory): Foo = factory.createFoo()

A best practice for me is to add all those single provisions to a separate file that I usually call StaticProvision.kt and that I put in the specific module’s root package. There it is easy to find, and it will contain not only the provision methods, but also other global configurations / constants that might be needed for the DI setup.

Provision different instances of the same type

Magnet supports providing and injecting different instances of the same type in any scope. All injections and provisions we did so far used no classifier, Classifier.NONE, but this can easily be changed:

// Provision

internal const val BUSY_SUBJECT = "busy-subject"

@Instance(type = Subject::class, classifier = BUSY_SUBJECT)

internal fun provideBusySubject(): Subject<Boolean> =

PublishSubject.create<Boolean>()

// Injection

internal class MyViewModel(

@Classifier(BUSY_SUBJECT) private val busySubject: Subject<Boolean>

) : ViewModel() { ... }

Of course you can also bind instances with a specific classifier: the bind(...) method accepts an optional classifier parameter with which you can “tag” the instance of the type as well. This is useful, for example, if you want to bind Activity intent data or Fragment argument values into your scope, so that they can be used in other places.
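One way to picture classifiers is as a second part of the lookup key. The following sketch is a toy model and not Magnet’s internals; BindingKey and ClassifiedBag are hypothetical names, and using the empty string for “no classifier” is an assumption of the sketch.

```kotlin
// Toy registry keyed by type plus classifier; all names are hypothetical.
data class BindingKey(val type: Class<*>, val classifier: String)

class ClassifiedBag {
    private val instances = mutableMapOf<BindingKey, Any>()

    // The empty string stands for "no classifier" in this sketch.
    fun <T : Any> bind(type: Class<T>, instance: T, classifier: String = "") {
        instances[BindingKey(type, classifier)] = instance
    }

    // Same type, different classifier: a different entry is returned.
    fun <T : Any> get(type: Class<T>, classifier: String = ""): T? =
        instances[BindingKey(type, classifier)]?.let { type.cast(it) }
}
```

Two instances of the same type can then live side by side, as long as they are registered and requested under different classifiers.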

Provisioning while hiding the implementation

You might have wondered why each @Instance provisioning configuration repeats the type that is provided. The reason is that you can specify another base type (and even multiple base types!) that you want your instance to satisfy. This allows you to easily hide your implementation and just have the interface “visible” in your dependency tree outside your module.

Consider the following:

interface FooCalculator {

fun calculate(): Foo

}

@Instance(type = FooCalculator::class)

internal class FooCalculatorImpl() : FooCalculator { ... }

While this is obviously something that Dagger2 allows you to do as well, there the configuration is usually detached. You specify an abstract provision of FooCalculator in a FooModule that, with luck, lives near the interface and implementation; but often it does not, because Dagger2 modules are tedious to write and most people reuse existing module definitions for all kinds of provisionings.

Magnet’s approach here is clean, concise and simple, so simple actually that most of the time I no longer separate interface and implementation into separate files, but keep them directly together.

Providing Scope

One not so obvious thing is that Magnet is able to provide the complete Scope a specific dependency lives in as a dependency itself. This might seem counter-intuitive at first, as it makes Magnet look like a service locator implementation, but there are use cases where this comes in handy.

Imagine you have a scheduled job to execute, and the worker of this job needs a specific set of classes to be instantiated and available during the execution of the job. However, multiple workers might be kicked off in parallel, so each worker instance needs its own set of dependencies, as some of them also hold state specific to the worker. How would one implement these requirements with Magnet?

Well, it looks like we could create a sub-scope for each worker and keep them separated this way, like so:

@Instance(type = JobManager::class)

class JobManager(private val scope: Scope, private val executor: Executor) {

fun start(firstParam: Int, secondParam: String) {

val subScope = scope.createSubscope {

bind(firstParam)

bind(secondParam)

}

val worker: Worker = subScope.getSingle()

executor.execute(worker)

}

}

@Instance(type = Worker::class)

class Worker(private val firstParam: Int, private val secondParam: String) : Runnable {

override fun run() { ... }

}

Injecting dependencies

Now we’ve talked at great length about how you provide dependencies in Magnet, but how do you actually retrieve them once they are provided?

Magnet offers several ways to retrieve dependencies:

- Scope.getSingle(Foo::class) (or a simple dependency on Foo in your class’ constructor) – This will try to retrieve a single instance of Foo while looking for it in the current scope and any parent scope. If it fails to find an instance, it will throw an exception at runtime. If several instances of Foo can be found / instantiated, it will also throw an exception.

- Scope.getOptional(Foo::class) (or a simple dependency on Foo? in your class’ constructor) – This will try to retrieve a single instance of Foo while looking for it in the current scope and any parent scope. If it fails to find an instance, it will return / inject null instead. If several instances of Foo can be found / instantiated, it will throw an exception.

- Scope.getMany(Foo::class) (or a simple dependency on List<Foo> in your class’ constructor) – This will try to retrieve multiple instances of Foo while, again, looking for them in the current scope and any parent scope. If no instance is provided, an empty list is returned / injected instead.
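The difference between the three lookups comes down to how they treat “none” and “more than one”. Here is a toy sketch of just these rules in plain Kotlin; CandidateBag is a hypothetical name, and the sketch leaves out scopes, parents and instantiation entirely.

```kotlin
// Toy lookup semantics; CandidateBag and its methods are hypothetical names.
class CandidateBag(private val candidates: List<Any>) {

    // Zero, one or many matches are all fine.
    fun <T : Any> getMany(type: Class<T>): List<T> =
        candidates.filterIsInstance(type)

    // Zero matches gives null, more than one is an error.
    fun <T : Any> getOptional(type: Class<T>): T? {
        val matches = getMany(type)
        check(matches.size <= 1) {
            "Expected at most one ${type.simpleName}, found ${matches.size}"
        }
        return matches.firstOrNull()
    }

    // Exactly one match is required.
    fun <T : Any> getSingle(type: Class<T>): T =
        getOptional(type) ?: error("No instance of ${type.simpleName} available")
}
```

In this model getSingle fails both for a missing and for an ambiguous type, getOptional only fails for an ambiguous one, and getMany never fails.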

An important difference to Dagger2 here is that it is not the provisioning side that determines whether a List of instances is available (in Dagger2 annotated with @Provides @IntoSet); instead, the injection side requests a list of things. Also, there is no way to provision a map of <key, value> pairs in Magnet, but this limitation is easy to work around by provisioning a List of instances of a custom type that carries both key and value:

interface TabPage {

val id: String

val title: String

}

@Instance(type = TabPagesHost::class)

internal class TabPagesHost(pages: List<TabPage>) {

private val tabPages: Map<String, TabPage> = pages.associateBy { it.id }

}

Optional features

Now you might not have noticed it in the last section, but the ability to retrieve optional dependencies in Magnet is actually quite powerful.

Imagine you have two modules in your application, foo and foo-impl. The foo module contains a public interface that foo-impl implements:

// `foo` module, FooManager.kt

interface FooManager {

fun doFoo()

}

// `foo-impl` module, FooManagerImpl.kt

@Instance(type = FooManager::class)

internal class FooManagerImpl() : FooManager {

override fun doFoo() { ... }

}

Naturally, foo-impl depends on the foo module, but in your app module it’s enough that you depend on foo for the time being to already make use of the feature:

// `app` module, build.gradle

android {

productFlavors {

demo { ... }

full { ... }

}

}

dependencies {

implementation project(':foo')

}

// `app` module, MyActivity.kt

class MyActivity : BaseMagnetActivity() {

override fun onCreate(savedInstanceState: Bundle?) {

super.onCreate(savedInstanceState)

...

findViewById<View>(R.id.some_button).setOnClickListener {

val fooManager: FooManager? = scope.getOptional()

fooManager?.doFoo()

}

}

}

If you then also put foo-impl on the classpath (e.g. through a different build variant or a dynamic feature implementation), the calling code above will continue to work without changes:

// `app` module, build.gradle

dependencies {

fullImplementation project(':foo-impl')

}

How cool is that?

Remember though that this technique only works in the specific module that acts as the assembly point (see above), so in case you have a more complex module dependency hierarchy you can’t manage optional features in a nested manner.

App extensions

AppExtensions is a small feature that is packaged as an additional module in Magnet. It allows you to extract all the code you typically keep in your application class into separate extensions, grouped by functionality, to keep the application class clean and “open for extension and closed for modification” (Open-Closed principle). Here is how you’d set it up:

// `app` module, build.gradle

dependencies {

implementation "de.halfbit:magnetx-app:3.3-rc7"

}

Then add the following code into your Application subclass:

class MyApplication : Application(), ScopeOwner {

...

private lateinit var extensions: AppExtension.Delegate

override fun onCreate() {

super.onCreate()

extensions = scope.getSingle()

extensions.onCreate()

}

override fun onTrimMemory(level: Int) {

extensions.onTrimMemory(level)

super.onTrimMemory(level)

}

}

There are many AppExtensions available, e.g. for LeakCanary, and you can even write your own. Try it out!

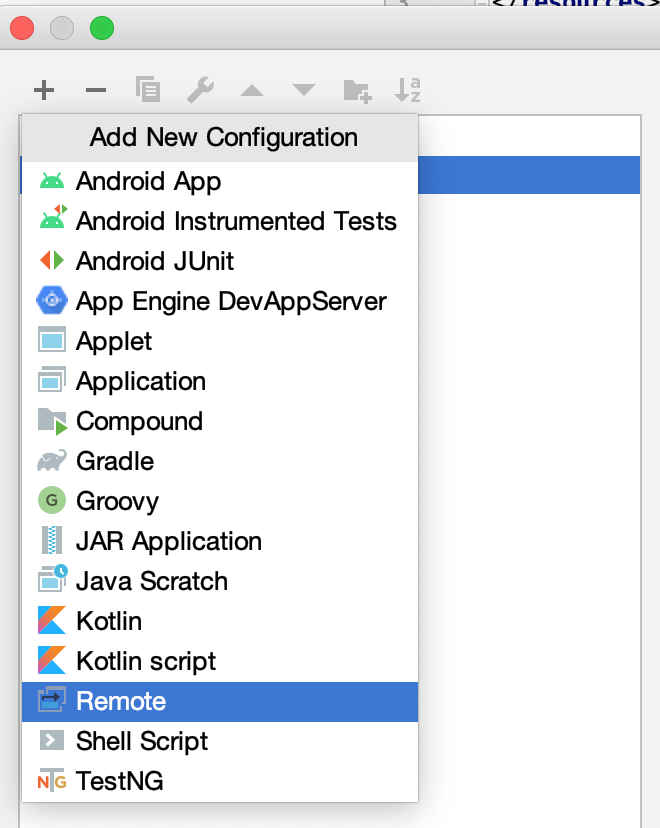

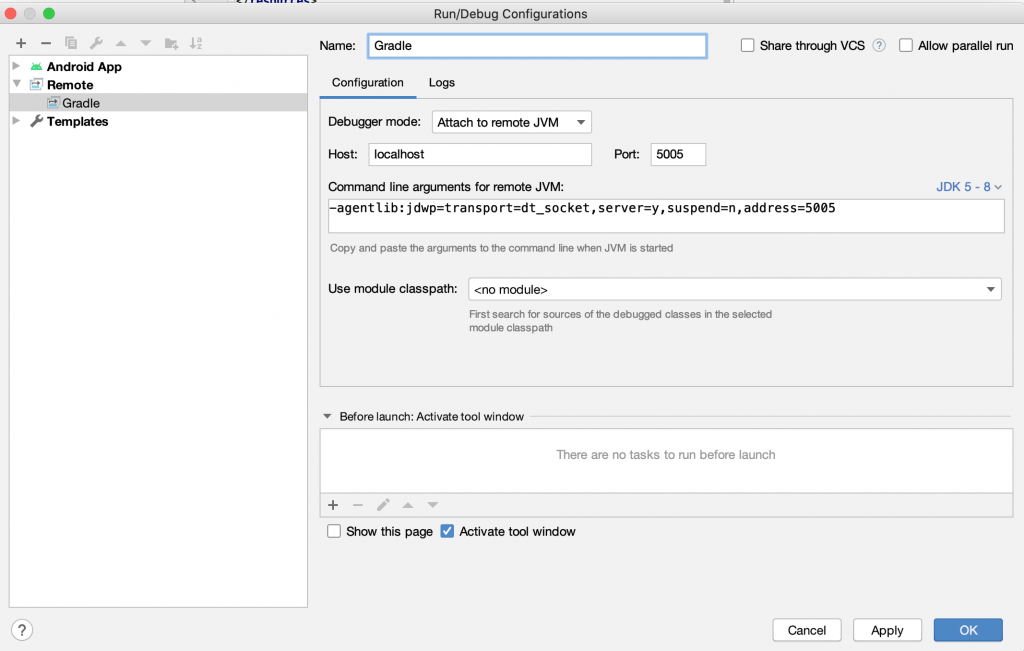

Debugging Magnet

Due to Magnet’s dynamic nature it might not always be totally obvious in which scope a certain instance lives. That is where Magnet’s Stetho support comes in handy.

First, add the following two dependencies to your app’s debug configuration:

// `app` module, build.gradle

dependencies {

debugImplementation "de.halfbit:magnetx-app-stetho-scope:3.3-rc7"

debugImplementation "com.facebook.stetho:stetho:1.5.1"

}

This will add an app extension to Magnet that contains some initialization code to connect to Stetho and dump the contents of all scopes to it. For the initialization code to be executed, your Application class needs to have the AppExtensions code shown in the previous section.

Now when you run your application, you can inspect it with Stetho’s dumpapp tool (just copy the dumpapp script and stetho_open.py into your project tree from here):

$ scripts/dumpapp -p my.cool.app magnet scope

Note that you need to have an active ADB connection for this to work. If you stumble upon errors, first check whether adb devices shows the device you want to debug and, if it does not, restart the ADB server / reconnect the device. The output then looks like this:

[1] magnet.internal.MagnetScope@1daafe1

BOUND Application my.cool.app.MyApplication@2906100

BOUND Context my.cool.app.MyApplication@2906100

TOPMOST SomeDependency my.cool.app.SomeDependency@2bd93c7

...

[2] magnet.internal.MagnetScope@f6213e5

BOUND CompositeDisposable io.reactivex.disposables.CompositeDisposable@6f06eba

...

[3] magnet.internal.MagnetScope@4c964c8

BOUND CompositeDisposable io.reactivex.disposables.CompositeDisposable@1250961

TOPMOST SomeFragmentDependency my.cool.app.SomeFragmentDependency@7a6bc86

...

[3] magnet.internal.MagnetScope@d740574

BOUND CompositeDisposable io.reactivex.disposables.CompositeDisposable@fc7da9d

TOPMOST SomeFragmentDependency my.cool.app.SomeFragmentDependency@bf4ff74

...

The number in [] brackets denotes the scope level, where [1] stands for the root scope, [2] for an activity scope and [3] for a fragment scope in this example. Then the type of binding is written in upper-case letters: things that are manually bound to the scope via Scope.bind() are denoted as BOUND, things that are automatically bound to a specific scope / level are denoted as TOPMOST, and finally things that are directly bound to a specific scope are denoted as DIRECT. Instances that are scoped with UNSCOPED aren’t listed here because, as we learned, they are not bound to any scope.

Roundup

Magnet is a powerful, easy-to-use DI solution for any application, but it is primarily targeted at large multi-module mobile apps.

There are a few more advanced features that I haven’t covered here, like selector-based injection. I’ll leave this as an exercise for the reader to explore and try out for her/himself 🙂

Anyway, if you made it this far, please give Magnet a chance and try it out. Due to its non-pervasive nature it can coexist side by side with other solutions, so you don’t have to convert existing applications all at once.

Many thanks to Sergej Shafarenka, the author of Magnet, for proofreading this blog.